mm_prefetch

Or when it's better to let the CPU do the guesswork.

OS kernels, databases, and other software that deals with a lot of I/O frequently use prefetching to hide disk latency, which is especially important for HDDs. CPUs also use prefetching to hide memory latency, loading data that is likely to be accessed soon into caches. Normally this happens automatically, but on supported platforms and hardware it’s possible to provide prefetch hints using instructions like prefetcht2 with the help of the __builtin_prefetch builtin.

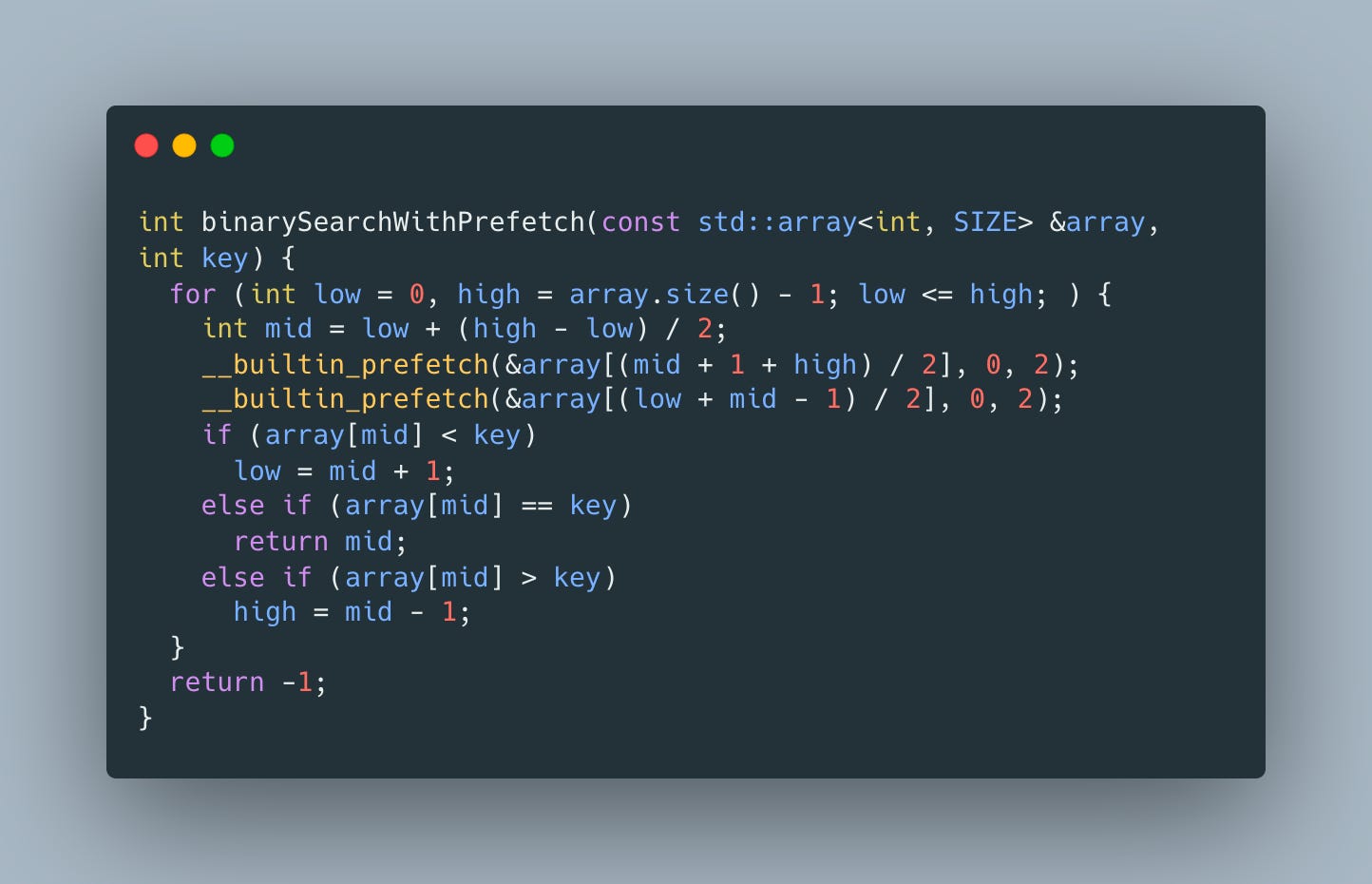

To see its impact, let’s check whether we can use it to reduce the cost of hard-to-predict binary search accesses by prefetching data for both potential middle elements. As a reference, we’ll use a classic implementation with 3 branches, which has an unnecessary extra branch but is trivial to understand.
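A minimal sketch of such a three-branch reference implementation is shown here. The function name `binary_search_plain`, the `std::vector<int>` input and the `-1` "not found" convention are assumptions made for illustration, not necessarily what the original code used.

```cpp
#include <vector>

// Classic three-branch binary search over a sorted vector.
// Returns the index of `target`, or -1 if it is not present.
// Name and signature are illustrative assumptions.
long binary_search_plain(const std::vector<int>& data, int target) {
    long left = 0;
    long right = static_cast<long>(data.size()) - 1;
    while (left <= right) {
        long middle = left + (right - left) / 2;
        if (data[middle] == target) {
            return middle;          // branch 1: found
        } else if (data[middle] < target) {
            left = middle + 1;      // branch 2: continue in the upper half
        } else {
            right = middle - 1;     // branch 3: continue in the lower half
        }
    }
    return -1;
}
```

The equality check is the extra branch: it can be dropped by searching for a lower bound instead, at the cost of slightly less obvious code.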

We then add 2 prefetch hints, one for each element that could become the next middle.
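One way the prefetching variant could look is sketched below, assuming GCC or Clang (which provide `__builtin_prefetch`). The name `binary_search_prefetch` and the way the two candidate indices are computed are assumptions, not the original code.

```cpp
#include <vector>

// Binary search that hints the CPU about both elements that could become
// the next middle. Requires GCC or Clang for __builtin_prefetch.
// Name and index computation are illustrative assumptions.
long binary_search_prefetch(const std::vector<int>& data, int target) {
    const int* base = data.data();
    long left = 0;
    long right = static_cast<long>(data.size()) - 1;
    while (left <= right) {
        long middle = left + (right - left) / 2;

        // Middle of [left, middle - 1] and of [middle + 1, right].
        // For degenerate halves these stay within [left, right + 1], so the
        // pointer arithmetic is at most one past the end, and the prefetch
        // itself is only a hint that never dereferences the address.
        long next_low = left + (middle - 1 - left) / 2;
        long next_high = (middle + 1) + (right - middle - 1) / 2;
        __builtin_prefetch(base + next_low);
        __builtin_prefetch(base + next_high);

        if (base[middle] == target) {
            return middle;
        } else if (base[middle] < target) {
            left = middle + 1;
        } else {
            right = middle - 1;
        }
    }
    return -1;
}
```

Both hints are issued before the comparison, so exactly one of them is wasted on every iteration.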

To see how much difference it makes, we’ll benchmark both versions.
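As a stand-in for a proper benchmarking framework, a rough timing harness could look like the sketch below. All helper names (`make_sorted_data`, `make_queries`, `ns_per_lookup`) are hypothetical, and the data layout and query distribution are assumptions rather than the setup used for the results in this post.

```cpp
#include <chrono>
#include <cstddef>
#include <random>
#include <vector>

// Sorted input 0, 2, 4, ... so that odd queries miss and even ones hit.
std::vector<int> make_sorted_data(std::size_t n) {
    std::vector<int> data;
    data.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        data.push_back(static_cast<int>(2 * i));
    }
    return data;
}

// Uniformly random queries in [0, max_value], so accesses are hard to predict.
std::vector<int> make_queries(std::size_t count, int max_value, unsigned seed) {
    std::mt19937 gen(seed);
    std::uniform_int_distribution<int> dist(0, max_value);
    std::vector<int> queries(count);
    for (int& q : queries) {
        q = dist(gen);
    }
    return queries;
}

// Runs `search(data, q)` for every query and returns the average time per
// lookup in nanoseconds. Results are accumulated into `checksum` so the
// compiler cannot discard the searches.
template <typename Search>
double ns_per_lookup(Search search, const std::vector<int>& data,
                     const std::vector<int>& queries, long& checksum) {
    if (queries.empty()) {
        return 0.0;
    }
    auto start = std::chrono::steady_clock::now();
    for (int q : queries) {
        checksum += search(data, q);
    }
    auto stop = std::chrono::steady_clock::now();
    std::chrono::duration<double, std::nano> elapsed = stop - start;
    return elapsed.count() / static_cast<double>(queries.size());
}
```

Each search function is passed as `search`, and the per-lookup times are compared on data large enough not to fit in cache. Numbers from a harness like this are noisy, so repeat the runs and compile with optimizations enabled.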

Unfortunately, the results are not very promising: the version with prefetching is 1.4 times slower. It’s possible to play with the third argument of the __builtin_prefetch builtin, locality, which controls how hard the cache should try to keep the data, but none of its values makes the prefetching version faster than the regular one.
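To try the different locality values, the hint can be made a template parameter, since `__builtin_prefetch` expects its second and third arguments to be compile-time constants. The sketch below assumes GCC or Clang; the function name `binary_search_prefetch_locality` is hypothetical.

```cpp
#include <vector>

// Prefetching binary search with the locality hint as a template parameter.
// Per the GCC documentation, locality ranges from 0 (no temporal locality,
// the data need not stay in cache) to 3 (high temporal locality, the default).
// The second argument, 0, marks the prefetch as being for a read.
// Name and signature are illustrative assumptions.
template <int Locality>
long binary_search_prefetch_locality(const std::vector<int>& data, int target) {
    static_assert(Locality >= 0 && Locality <= 3, "locality must be in [0, 3]");
    const int* base = data.data();
    long left = 0;
    long right = static_cast<long>(data.size()) - 1;
    while (left <= right) {
        long middle = left + (right - left) / 2;
        // Candidates for the next middle in the lower and upper halves.
        long next_low = left + (middle - 1 - left) / 2;
        long next_high = (middle + 1) + (right - middle - 1) / 2;
        __builtin_prefetch(base + next_low, 0, Locality);
        __builtin_prefetch(base + next_high, 0, Locality);

        if (base[middle] == target) {
            return middle;
        } else if (base[middle] < target) {
            left = middle + 1;
        } else {
            right = middle - 1;
        }
    }
    return -1;
}
```

Instantiating it as `binary_search_prefetch_locality<0>` through `binary_search_prefetch_locality<3>` gives four variants to benchmark against the plain version.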

As such, prefetching is a powerful technique, but it can just as easily slow things down, so make sure you measure its impact on production loads before using it.