Reusing memory.

Or the easiest way to improve performance.

Vector databases are a new breed of database designed to store and manage high-dimensional vectors. These vectors represent the features or attributes of data, and they can be used for similarity search, clustering, and other machine learning tasks.

High dimensionality, data sparsity, indexing, and computational complexity are just some of the challenges vector DBs have to tackle. So when I went looking for interesting insights in Weaviate DB, I didn’t expect any easy wins, given how much effort has gone into tuning its performance. Yet I was wrong: a trivial change to reuse an output vector was sufficient to speed up the combination of vectors of a size that is tiny by vector DB standards

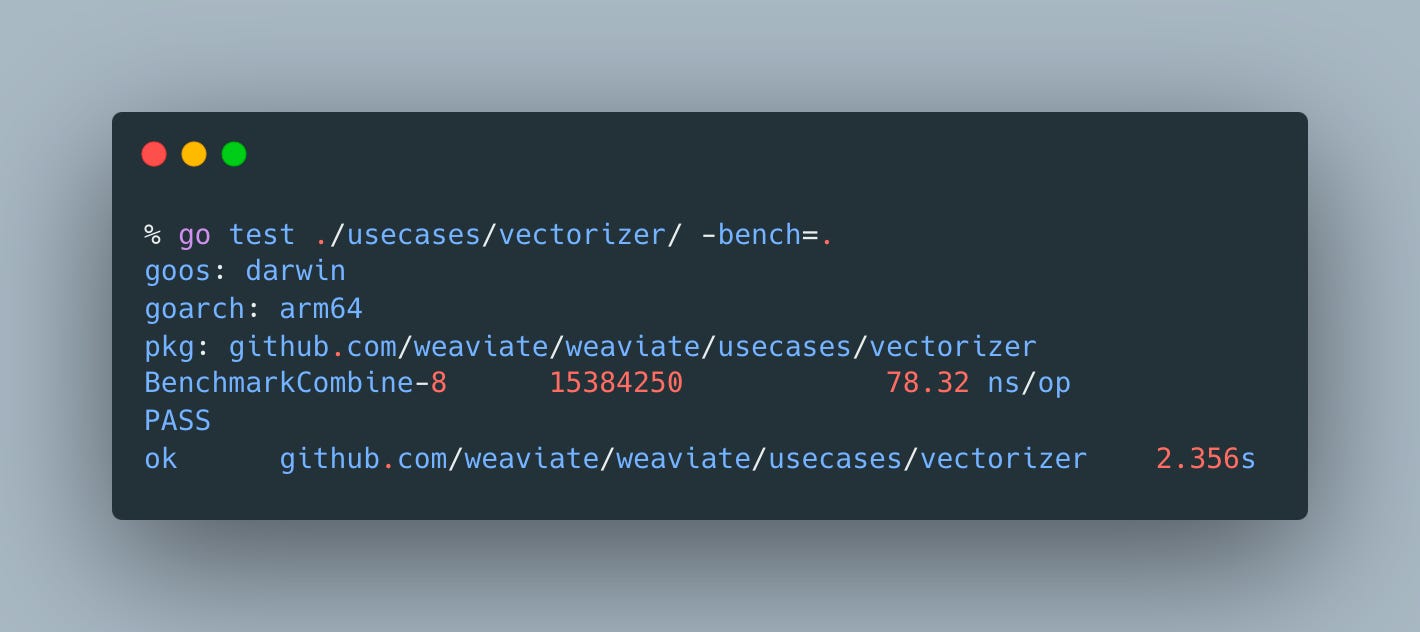

by ~12.5% compared with the original.

Obviously, the wins, especially in terms of memory, can be much larger.
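To make the idea concrete, here is a minimal sketch of what reusing an output vector can look like. It is not Weaviate's actual code: `combineAlloc`, `combineInto`, and `accumulateMean` are hypothetical names, the combination is assumed to be an element-wise mean, and all input vectors are assumed to have the same length.

```go
package main

import "fmt"

// combineAlloc is the hypothetical baseline: it allocates a fresh output
// slice on every call.
func combineAlloc(vectors [][]float32) []float32 {
	if len(vectors) == 0 {
		return nil
	}
	out := make([]float32, len(vectors[0]))
	accumulateMean(out, vectors)
	return out
}

// combineInto computes the same result but writes into dst, allocating only
// when dst does not have enough capacity. The caller keeps the returned slice
// and passes it back in on the next call.
func combineInto(dst []float32, vectors [][]float32) []float32 {
	if len(vectors) == 0 {
		return dst[:0]
	}
	dim := len(vectors[0])
	if cap(dst) < dim {
		dst = make([]float32, dim)
	}
	dst = dst[:dim]
	for i := range dst {
		dst[i] = 0 // clear whatever the previous call left behind
	}
	accumulateMean(dst, vectors)
	return dst
}

// accumulateMean adds all vectors into out (which must be zeroed) and divides
// by the number of vectors. Assumes every vector has at least len(out) elements.
func accumulateMean(out []float32, vectors [][]float32) {
	for _, v := range vectors {
		for i := range out {
			out[i] += v[i]
		}
	}
	n := float32(len(vectors))
	for i := range out {
		out[i] /= n
	}
}

func main() {
	vectors := [][]float32{{1, 2, 3}, {3, 4, 5}}

	buf := make([]float32, 0, 3) // allocated once, reused on every iteration
	for i := 0; i < 3; i++ {
		buf = combineInto(buf, vectors)
	}
	fmt.Println(combineAlloc(vectors), buf) // prints "[2 3 4] [2 3 4]"
}
```

The trade-off is that the caller now owns the buffer's lifetime: the result of one call is overwritten by the next, so it must be consumed or copied before the buffer is reused.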

Frankly, I’m somewhat surprised that the Go compiler is unable to perform this kind of optimization automatically. After all, it already does escape analysis, and with that knowledge it’s not a huge leap to reusing memory that it knows is going to be collected.

This case is an example of trivial reuse, but there are many other, more interesting approaches, including object pooling with sync.Pool and thread-local memory.
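As a rough illustration of the pooling approach, the sketch below borrows a scratch buffer from a `sync.Pool` instead of allocating one per call. The function `centroidSquaredNorm`, the pool name `bufPool`, and the initial capacity of 1536 are assumptions made up for this example, and all input vectors are assumed to have the same length.

```go
package main

import (
	"fmt"
	"sync"
)

// bufPool hands out scratch buffers. It stores *[]float32 rather than
// []float32 so that putting a buffer back does not allocate a new slice
// header when it is converted to an interface value.
var bufPool = sync.Pool{
	New: func() any {
		b := make([]float32, 0, 1536) // hypothetical typical dimension
		return &b
	},
}

// centroidSquaredNorm returns the squared L2 norm of the mean of the input
// vectors, using a pooled buffer for the intermediate sum.
func centroidSquaredNorm(vectors [][]float32) float32 {
	if len(vectors) == 0 {
		return 0
	}
	dim := len(vectors[0])

	bp := bufPool.Get().(*[]float32)
	buf := *bp
	if cap(buf) < dim {
		buf = make([]float32, dim)
	}
	buf = buf[:dim]
	for i := range buf {
		buf[i] = 0 // pooled memory may hold data from a previous user
	}

	for _, v := range vectors {
		for i := range buf {
			buf[i] += v[i]
		}
	}
	n := float32(len(vectors))
	var sum float32
	for _, x := range buf {
		x /= n
		sum += x * x
	}

	*bp = buf[:0] // hand back the (possibly grown) buffer
	bufPool.Put(bp)
	return sum
}

func main() {
	vectors := [][]float32{{1, 2}, {3, 4}}
	fmt.Println(centroidSquaredNorm(vectors)) // mean is (2, 3), so this prints 13
}
```

Note that `sync.Pool` may drop pooled objects at any garbage collection, so it reduces allocation pressure rather than guaranteeing that a buffer is reused.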

"In place" algorithms are typically faster. But one has to avoid making code changes that accidentally violate data dependencies in loops and algorithms overall, e.g. when the right-hand side suddenly consumes an updated value as a result of the code optimization. But in a few cases we can make this "mistake" on purpose to get more accurate algorithms! For example, Gauss Seidel iterations (reuse updated values that are converging) versus Jacobi iterations (no reuse).

Funny that reusing memory appears to be controversial to some folks, judging from LinkedIn comments. Perhaps functional programming comes to mind, in which nothing is reused (at least not in the abstract!). But efficient imperative computing with numerical algorithms almost always entails implementations that reuse memory. Local reuse is important too, e.g. block-wise matrix algorithms make better use of the cache by improving spatial and temporal locality. Code readability is not the ultimate goal here, since the algorithms are well understood and documented. Documentation is key.