Anyone spoiled by the zero-cost abstraction principle of C++ and Rust may be tempted to expect similar behavior from other programming languages. Unfortunately, that is seldom the case.

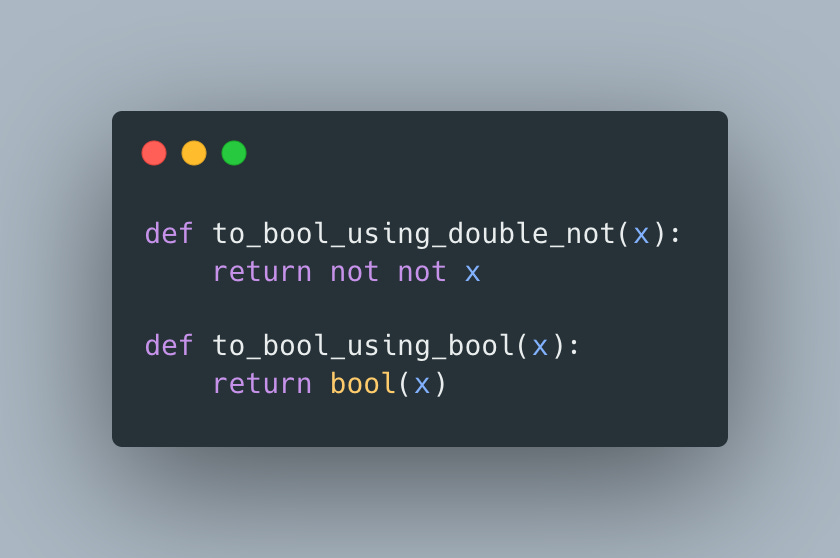

Let’s take a fairly common operation: converting an arbitrary object instance to a boolean in Python. Contrary to the Zen of Python’s "There should be one-- and preferably only one --obvious way to do it.", there are several ways to achieve this, the two most common being double negation (`not not x`) and a call to the built-in `bool` function (`bool(x)`).

Applying `not` to an instance produces the opposite of its truthiness as a boolean value, and a second `not` produces its original boolean equivalent. Calling the `bool` function invokes `__bool__` under the hood, which can be used to define custom logic for converting to boolean values (the `not` operator consults the same method).
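To make the two spellings concrete, here is a minimal sketch. The `Basket` class is a hypothetical example introduced only for illustration; it defines `__bool__` so that an instance is truthy only when it holds items.

```python
class Basket:
    """Hypothetical container that is truthy only when it holds items."""

    def __init__(self, items):
        self.items = list(items)

    def __bool__(self):
        # User-defined truthiness: consulted by both `not` and `bool()`.
        return len(self.items) > 0


empty, full = Basket([]), Basket(["apple"])

# Double negation and bool() should agree for every object.
via_not = (not not empty, not not full)
via_bool = (bool(empty), bool(full))

print(via_not, via_bool)
```

Both approaches go through the same truth-testing protocol, so they should always return the same value; only the way the interpreter reaches that protocol differs.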

At first glance, it seems like double negation should be slower, since it involves two operations, at least one of which performs a conversion to the boolean type, whereas calling `bool` should do exactly what we need. Sure, Python is not meant for compute-intensive tasks, and this type of conversion is unlikely to be a performance bottleneck, but just out of curiosity, let’s put our hypothesis to the test.
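One way to run such a test is sketched below with the standard `timeit` module. The sample value, the iteration count, and the variable names are assumptions made for this sketch, and the absolute numbers will vary with the machine and the Python version.

```python
import timeit

# Arbitrary truthy object to convert; an assumption for this sketch.
namespace = {"x": [1, 2, 3]}
n = 200_000

# Take the best of several runs to reduce noise from other processes.
t_not = min(timeit.repeat("not not x", globals=namespace, number=n, repeat=5))
t_bool = min(timeit.repeat("bool(x)", globals=namespace, number=n, repeat=5))

print(f"not not x: {t_not / n * 1e9:.1f} ns per conversion")
print(f"bool(x):   {t_bool / n * 1e9:.1f} ns per conversion")
print(f"ratio bool / not-not: {t_bool / t_not:.1f}")
```

Passing the statements as strings keeps the measurement focused on the conversion itself rather than on the overhead of an extra wrapper function.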

Ouch, it looks like the `bool` function is more than 10x slower! To understand why, let’s take a look at the Python bytecode for the two approaches.

The bytecode reveals that boolean negation is performed using a dedicated `UNARY_NOT` instruction, whereas invoking `bool` requires a `CALL_FUNCTION` instruction, that is, a full function call, which explains the performance difference.
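The bytecode comparison can be reproduced with the standard `dis` module, as in the sketch below. The helper names `via_not`, `via_bool`, and `opnames` are hypothetical. Opcode names depend on the CPython version: newer releases use `CALL` rather than `CALL_FUNCTION`, and the instructions emitted for the double negation may differ as well.

```python
import dis


def via_not(x):
    return not not x


def via_bool(x):
    return bool(x)


def opnames(func):
    """Return the names of the bytecode instructions compiled for func."""
    return [instr.opname for instr in dis.get_instructions(func)]


not_ops = opnames(via_not)
bool_ops = opnames(via_bool)

# The bool() version has to look up the global name and then perform a call;
# the double negation needs neither.
print("not not x:", not_ops)
print("bool(x):  ", bool_ops)
```

Calling `dis.dis(via_bool)` instead prints the full human-readable listing, including the arguments of each instruction.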

Even though Python is not the language of choice for CPU-intensive applications, this serves as a good reminder of the potentially non-obvious ways in which interpreters and compilers implement syntactic constructs.

Interesting. And what do you think about LOAD_GLOBAL and the GIL nature of Python? Do you think they also contribute to the overall sluggishness?